Study of the Week - Lessons from a Serious Medical Mistake

At the heart of one of modern medicine's greatest errors was confusing correlation and causation.

When I began medical training, experts felt they knew how to prevent heart disease in post-menopausal women. It was simple: extend their exposure to estrogen by giving hormone replacement therapy (HRT).

It made sense: estrogen worked in the liver to reduce levels of bad cholesterol. Rates of heart disease in women increased after menopause when estrogen levels dropped.

And there were studies—lots of them.

In 1992, the prestigious journal Annals of Internal Medicine published a meta-analysis (a study that pools the results of many studies) of hormonal therapy to prevent heart disease and prolong life in post-menopausal women.

I made a slide of the main findings and conclusions. In the right column are the many observational studies of HRT. Notice that most have estimates less than 1.00, which means that the group of women who took HRT had lower rates of coronary disease.
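For readers less familiar with these estimates, here is a minimal sketch of how a relative risk is computed and why a value below 1.00 favors the treated group. The counts are hypothetical, chosen only for illustration; they are not taken from the meta-analysis.

```python
def relative_risk(events_treated, n_treated, events_untreated, n_untreated):
    """Ratio of the event rate in the treated group to the rate in the untreated group."""
    rate_treated = events_treated / n_treated
    rate_untreated = events_untreated / n_untreated
    return rate_treated / rate_untreated

# Hypothetical counts (NOT from any real study):
# 30 coronary events among 1,000 HRT users, 50 among 1,000 non-users.
rr = relative_risk(30, 1000, 50, 1000)
print(f"{rr:.2f}")  # prints 0.60
```

A value of 0.60 would mean the HRT group had 40% fewer events. In an observational study, it says nothing about whether HRT was the reason.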

In the next slide I’ve highlighted the results and conclusions. Note the causal verbs.

In this telling, HRT is not merely associated with better outcomes; it decreases the risk for coronary heart disease. I remind you that these were non-randomized comparisons of women who took HRT vs those who did not.

Findings like these did a great deal to create a therapeutic fashion.

Journalist Gary Taubes writes that by 2001, 15 million women were taking HRT for preventive purposes.

The exact number isn’t important; what’s important is that lots of women took preventive HRT—for years—in the absence of a proper randomized trial.

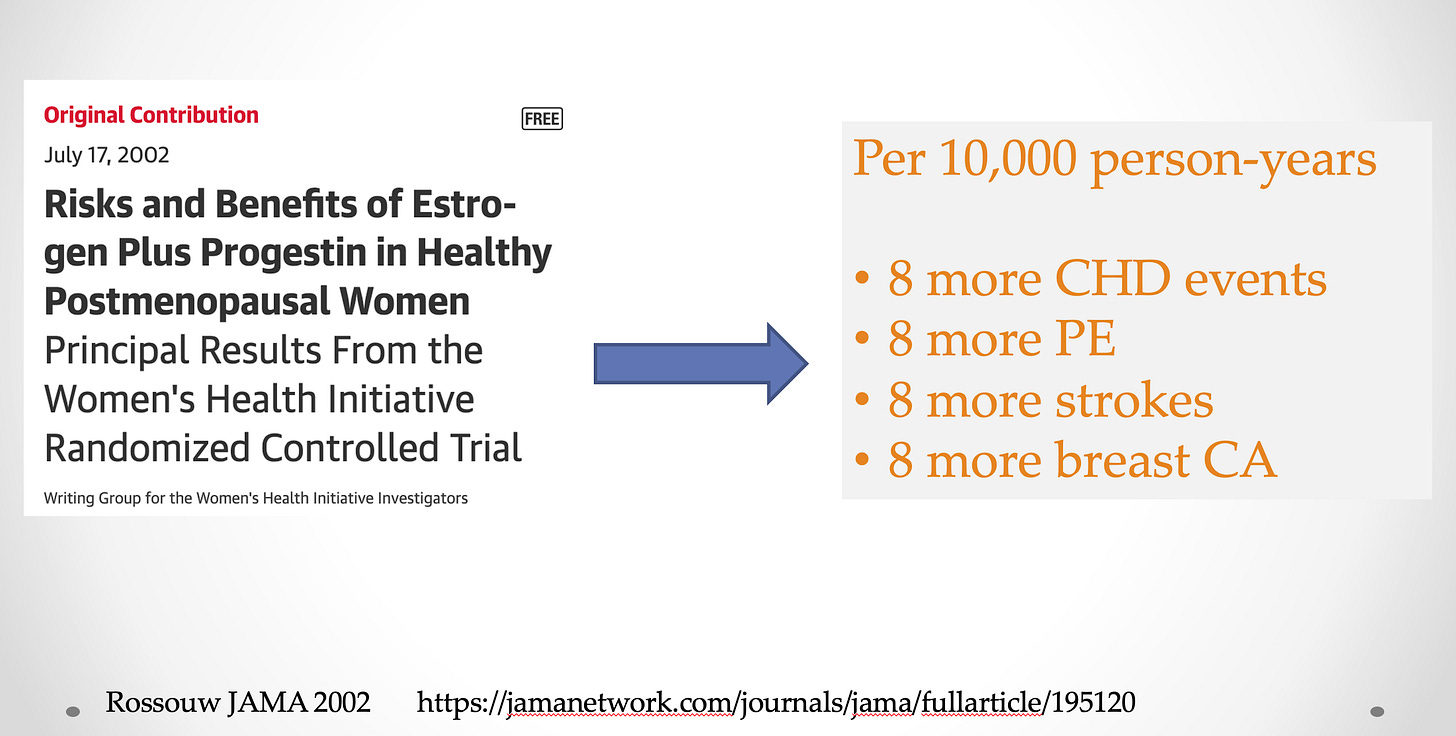

The trial finally came. JAMA published the Women’s Health Initiative trial in 2002.

It was a large trial with more than 8000 post-menopausal women in each group (HRT vs placebo). The primary outcome was strong: heart attack or death due to heart disease. Breast cancer was a safety endpoint.

The results were stunning. Not only did HRT not prevent heart disease, it caused more heart disease, pulmonary embolisms, stroke, and breast cancer than placebo.

I did a little algebra to determine what a rate of 32 per 10,000 would look like in 2001, when 15 million women were taking HRT.

The numbers are staggering. In one year alone, HRT led to nearly 50,000 women being harmed.
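The algebra above can be sketched in a few lines. This assumes the 32 per 10,000 figure is a yearly rate of excess harm and that it applies uniformly to all 15 million women, which is a rough extrapolation from the trial population. The variable and function names are my own.

```python
# Figures quoted in the text; the uniform extrapolation is an assumption.
EXCESS_HARMS_PER_10000 = 32      # rate from the text, taken as per year
WOMEN_ON_HRT = 15_000_000        # women taking preventive HRT in 2001

def women_harmed(rate_per_10000, population):
    """Scale a per-10,000 rate up to a whole population."""
    return rate_per_10000 * population / 10_000

harmed = women_harmed(EXCESS_HARMS_PER_10000, WOMEN_ON_HRT)
print(f"{harmed:,.0f}")  # prints 48,000
```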

(An important caveat is that WHI looked at HRT for prevention.)

Two Main Lessons:

The first lesson is that bio-medicine is hard. No matter how much sense something makes, the likelihood is that it won’t work. From a Bayesian point of view, our prior expectations should mostly be pessimistic. This is especially true when it comes to preventing disease.

The second lesson is that observational research has serious limitations when it comes to making causal inferences. Without random assignment, you don’t know if the comparison groups were similar.

In this case, women who decided to take HRT were likely healthier than women who did not. And it is those healthier attributes that led to the findings of lower risk.

Randomization fixes this flaw because it (mostly) balances characteristics that you can see and those that you cannot see.

And it doesn’t matter if these non-random studies contain large numbers of patients or if there are many studies. They can all be biased in the same way. A systematic bias is the same size in a study of 100 or 10,000 patients.
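A toy simulation can make this concrete. The sketch below is not a model of the WHI or of any real cohort. Every number in it is an assumption chosen for illustration: half the women are "healthy," healthy women are more likely to choose treatment, and the treatment itself has no effect at all on events.

```python
import random

def simulated_relative_risk(n_women, randomized, seed=1):
    """Relative risk (treated vs untreated) for a treatment with NO true effect."""
    rng = random.Random(seed)
    events = {True: 0, False: 0}   # event counts, keyed by treated status
    totals = {True: 0, False: 0}   # group sizes, keyed by treated status
    for _ in range(n_women):
        healthy = rng.random() < 0.5           # hidden characteristic
        if randomized:
            treated = rng.random() < 0.5       # coin flip ignores health
        else:
            # Assumed self-selection: healthier women choose treatment more often.
            treated = rng.random() < (0.8 if healthy else 0.2)
        # Event risk depends only on health, never on treatment.
        risk = 0.05 if healthy else 0.15
        had_event = rng.random() < risk
        totals[treated] += 1
        events[treated] += had_event
    return (events[True] / totals[True]) / (events[False] / totals[False])

for n in (2_000, 20_000, 200_000):
    obs = simulated_relative_risk(n, randomized=False)
    rct = simulated_relative_risk(n, randomized=True)
    print(f"n={n:>7,}  observational RR={obs:.2f}  randomized RR={rct:.2f}")
```

Under these assumptions the self-selected comparison settles near 0.07 / 0.13, or about 0.54, however large the sample gets. The treatment looks protective even though it does nothing. The randomized comparison settles near 1.0. More patients shrink the noise, not the bias.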

Cardiologist David Cohen succinctly states the existential problem with observational studies:

I want now to add two caveats about observational research.

The first is that it is not useless. Observational studies can tell us things like what we are doing (e.g. the number of procedures), who we are doing these procedures on, and rates of complications. This is useful.

The second caveat is that there are groups, such as Miguel Hernan’s team, working on ways to simulate trials from observational studies. The important point here is that his target trial technique is best used in spaces where there are no randomized trials or when randomized trials are not feasible.

Conclusion:

Medicine is replete with examples where hubris led us to cause harm.

Most often, it is over-confidence in therapies that make sense and show positive results in observational studies. Always beware of non-randomized comparisons.

The antidote is randomization. The onus is on the proponents of new therapies to show that they work in a randomized trial. In the end, this mindset will lead to far less harm.

Unfortunately, as with opioids, we overcorrected on this issue. Absolute risk is something we need to consider as physicians. Let’s return to treating the individual, considering that person's values, risks, and potential benefits of a treatment. Maybe then, after the past embarrassing 2 years for our profession, we can regain the public’s trust.

A couple of items. First, all “studies” begin with a single observation. It’s the genesis, the seed, for a hypothesis and then a testable theory. So observational studies are powerful in that they guide innovation. A serious problem with studies designed for statistical power is the systematic introduction of error that can dilute or eliminate the real effects observed in the case study. This happens often when the suspected treatment agent is only effective when interacting with a second unidentified factor, or when an unidentified factor is responsible for the observation. Jumping to a parametric study will then find no effect, and the discovery is lost.

Second, in the HRT study, the assumption that estrogen (E) lowering LDL is the mechanism that reduces cardiovascular events is mistaken, which led to the finding of no effect in the parametric study. Many studies have shown that cholesterol is not the relevant factor in CVD; the relevant factor is vascular inflammation. In fact, the relevant variable in the study mentioned is actually vitamin D status and its effect on reducing inflammation, and hence CVD. So, while E is a minor agonist of vitamin D, the heavy lifting with respect to CVD was done by vitamin D. So here we have that second unidentified, unappreciated, perhaps ignored variable that is responsible for the observed effect. Don’t get me started on why vitamin D, a free, safe, readily available agent, is systematically vilified or ignored by the medical community. I think it has something to do with $$$.