Study of the Week -- Rebuttal of Last Week's Column

This week features two criticisms of last week's Study-of-the-Week

My column last week generated a bunch of civil discourse. Thank you. This is the core idea of Sensible Medicine!

So this week I want to expand on two important critiques of my interpretation of the provocative Brian Nosek paper that reported that the way one analyzes data can affect the results.

To review last week:

Brian Nosek brought together 29 teams of data scientists to analyze one data set to answer one question. Were professional soccer referees more likely to give red cards to dark-skin-toned players than light-skin-toned players?

The 29 teams of experts analyzed the same dataset in 29 different ways.

I first found it striking that there were actually this many ways to analyze data to answer a seemingly simple question. Doctors are never taught this.

But even more shocking to me was that two-thirds of the expert teams of data scientists detected a significant result and one-third found no statistical difference.

Here was the picture:

My take was that if there was this much flex in the results of medical studies, we a) ought to be a lot less cocksure about evidence, and b) should require researchers to submit their data so that independent experts can analyze them and confirm the results.

Two Criticisms of This Interpretation:

Dr. Raj Mehta countered my take by saying, basically, hey Mandrola, look at that picture again and note the overlap of those confidence intervals.

The orange rectangle highlights what Mehta is referring to.

His point is that if you ignore statistical thresholds, then the vast majority of these analytic approaches reported quite similar estimates. This, he writes, “is a good sign of reproducibility.”

This point leads me to the second criticism of my interpretation: that is, the idea of statistical thresholds.

Let’s go back to the first picture and focus on the yellow box noting that two-thirds of these methods led to statistically significant results and one-third did not.

The criticism here centers on the notion that a statistical threshold is indeed an arbitrary distinction.

If any part of the 95% confidence interval overlaps the dotted line at 1.00 (an odds ratio of 1 means no effect), the result is considered non-significant.
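For readers who like to see the mechanics, here is a minimal sketch of that rule in Python. It assumes the simplest possible setup, a 2x2 table with a Wald interval on the log-odds scale, which is not what the 29 teams actually did (they used a variety of models). The counts are hypothetical and chosen only for illustration; the function names are mine.

```python
import math

def odds_ratio_ci(a, b, c, d, z=1.96):
    """Odds ratio and Wald 95% CI from a 2x2 table.

    a, b = events and non-events in group 1
    c, d = events and non-events in group 2
    """
    odds_ratio = (a * d) / (b * c)
    # Standard error of log(OR) under the usual Wald approximation
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log)
    upper = math.exp(math.log(odds_ratio) + z * se_log)
    return odds_ratio, lower, upper

def crosses_one(lower, upper):
    """True when the interval includes 1.0, i.e. 'non-significant' by convention."""
    return lower <= 1.0 <= upper

# Hypothetical counts, NOT from the red-card dataset
odds_ratio, lower, upper = odds_ratio_ci(30, 970, 20, 980)
print(f"OR = {odds_ratio:.2f}, 95% CI {lower:.2f} to {upper:.2f}")
print("non-significant" if crosses_one(lower, upper) else "significant")
```

Note that the verdict depends entirely on whether one end of the interval sits a hair above or below 1.0. The point estimate plays no direct role in the label.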

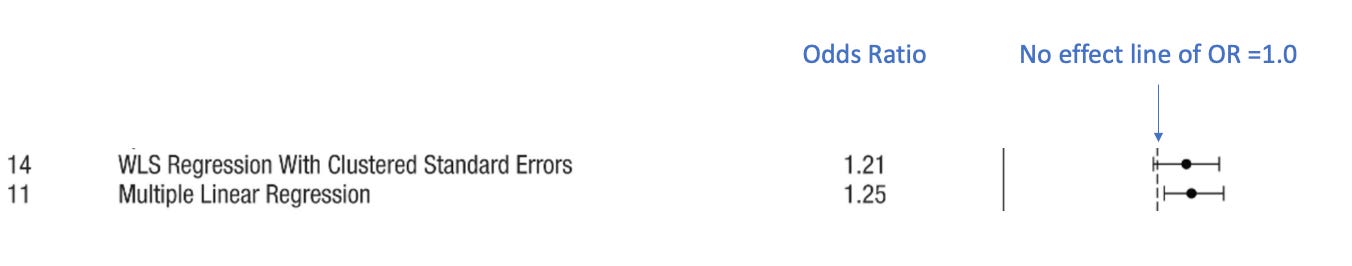

The picture below reveals the challenge of using only statistical thresholds in the interpretation of effect sizes. I highlighted the effect sizes and confidence intervals of team 14 and team 11.

Team 14 finds an odds ratio of 1.21 for dark-skin-toned players receiving red cards, or roughly 21% higher odds. Their confidence interval, however, barely overlaps 1.0 (see the dotted line). This result would be interpreted as finding no significant difference.

Team 11 finds an odds ratio of 1.25, or roughly 25% higher odds. But their confidence interval, which is nearly identical to team 14's, does not cross 1.0. This result would be interpreted as statistically significant.

Two results that are nearly identical are interpreted (statistically) in opposite ways.
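A rough sketch of how this plays out in numbers: the Python below backs a two-sided p-value out of a 95% confidence interval, assuming the interval is symmetric on the log-odds scale. The interval bounds are made up for illustration; I picked them only to be roughly consistent with the point estimates of 1.21 and 1.25, and they are not the teams' reported intervals. The helper name is hypothetical.

```python
import math
from statistics import NormalDist

def p_value_from_ci(lower, upper, z=1.96):
    """Approximate two-sided p-value implied by a 95% CI for an odds ratio.

    Assumes the CI is symmetric around the estimate on the log scale.
    """
    log_lower, log_upper = math.log(lower), math.log(upper)
    estimate = (log_lower + log_upper) / 2      # log odds ratio
    se = (log_upper - log_lower) / (2 * z)      # implied standard error
    z_stat = estimate / se
    return 2 * (1 - NormalDist().cdf(abs(z_stat)))

# ILLUSTRATIVE bounds only (assumed, not taken from the paper)
p_team_14 = p_value_from_ci(0.98, 1.49)  # interval just includes 1.0
p_team_11 = p_value_from_ci(1.02, 1.53)  # interval just excludes 1.0

print(f"Team 14 (illustrative): p = {p_team_14:.3f}")
print(f"Team 11 (illustrative): p = {p_team_11:.3f}")
```

Under these assumed bounds, the two p-values fall on opposite sides of 0.05 even though the intervals differ by only a few hundredths at each end.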

I am not arguing that statistical thresholds are useless; I am merely saying that they are arbitrary, and we should not reflexively equate significant results with “positive” findings and non-significant results with “negative” findings.

Conclusion:

The Nosek paper remains vitally important. It finds that the way a researcher analyzes data can affect the result. And, for better or worse, studies report results using statistical thresholds. That needs to be considered every time we see a result—especially when it is close to that threshold.

Yes, Nosek found that most of the 29 teams reported roughly similar results. And, yes, two super-similar results could land on opposite sides of an arbitrary statistical threshold. That doesn’t mean one is right and the other wrong.

The point of this week’s column is that consumers of medical evidence should not necessarily rely on the researcher’s interpretation. We need to stop and think. And actually look at the results.

I realize this all may seem hopelessly complicated. But it is not. Trust me.

In much the same way that we learn how to assess value when buying a pricey item, we can also learn the skills of evaluating medical evidence.

This is our goal.

As someone with a graduate degree in one of the applied sciences (exercise science), I can tell you it is hopeless for the average American to be able to interpret scientific studies. Heck, most people with undergrad degrees in an applied science can't do it, never mind people with zero training in it. This is essentially why the CDC and the Biden admin were able to dupe most Americans into the idea that masks were effective and everyone needed to be vaccinated. The truth is murkier and requires the ability to analyze the studies rather than just take the CDC's word for it. The answer to almost every question in the applied sciences is almost always "it depends" and rarely a sweeping "yes" or "no".

These last 2.5 years have highlighted the need to find people who are smarter than you, more experienced than you, and whose judgment you implicitly trust to help sort through the sand that is modern medicine. I am grateful to have discovered the Sensible Medicine group.