The Definitive Analysis of Observational Studies

Buckle up for this Study of the Week. It shocked me. You may never read another observational study in the same way.

The study question is red meat consumption and mortality. The results of this study, though, are not the exciting part. The exciting part is how these scientists went about studying the association between red meat and death. Yes. It’s the Methods!

Allow me an experiment this week. I will write about a fascinating paper. Then I will speak with the author and release our conversation later. You can assess how well (or poorly) a practicing cardiologist did at analyzing a paper in the Journal of Clinical Epidemiology.

The objective of this group, led by Dr. Dena Zeraatkar from McMaster University in Canada, was to present something called a specification curve analysis.

I know what you are thinking: Mandrola, in the third paragraph, you start with specification curve analysis, a complicated term that we have never heard of. Worry not. I will explain why it’s so nifty.

Specification curve analysis is similar to a multiverse analysis, meaning it’s a way of defining and implementing all plausible and valid analytic approaches to a research question, in this case one from nutritional epidemiology.

Take a moment and think about the methods section of a standard association study, say, blueberries and rates of stroke. The authors of such papers will write, “We analyzed the data in this way.” In other words: one way.

But. But. There are, of course, many choices of ways to analyze the data.

Since most observational studies are not pre-registered, you can imagine a scenario where authors actually did a number of analyses and published the one that yielded an association with a p-value of less than 0.05.
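How much does running several analyses inflate the odds of a “finding”? Here is a minimal sketch. It assumes each analysis is an independent test of a hypothesis that is actually false (no real association) at the usual 0.05 threshold. Real analyses of one dataset are correlated, so the true inflation is smaller than this idealized figure, but it runs in the same direction.

```python
def chance_of_false_positive(k: int, alpha: float = 0.05) -> float:
    """Probability that at least one of k independent tests of a true null
    hypothesis yields p < alpha (idealized: assumes the tests are independent)."""
    return 1 - (1 - alpha) ** k


for k in (1, 5, 10, 20):
    print(f"{k:>2} analyses -> {chance_of_false_positive(k):.0%} chance of a false positive")
```

With 10 independent tries, the chance of at least one p < 0.05 is about 40%. With 20 tries, it is about 64%.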

A few years ago, I wrote about Brian Nosek, a scientist at the University of Virginia who brought together 29 teams of data scientists to analyze one dataset and answer one question: did European soccer referees give more red cards to dark-skinned players?

Nosek found that a) expert data scientists chose many different ways to analyze the single dataset, and b) about two-thirds of the teams found a significant association and one-third found no significant association.

This came as a shock to me. The cardiac literature overflows with association studies, but each one uses one analytic method. What if different scientists had chosen to analyze the data in different ways? The results, and the resulting media explosion, could be much different.

Here is what Zeraatkar and colleagues did. First, they performed a systematic review of all observational studies addressing the red meat-death association. In this step, they documented the variations in analytic method (for instance, the choice of model and covariates).

Second, they listed all defensible combinations of analytic choices (there were a lot) to produce an exhaustive list of all the ways the data could reasonably be analyzed. Think exponents: just 3 choices for each of five aspects of the analysis equals 243 approaches (3⁵).
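Here is a minimal sketch of how quickly the choices multiply. The five aspects and their options below are hypothetical placeholders, not the ones Zeraatkar and colleagues catalogued. The point is only that the full set of specifications is the Cartesian product of the options.

```python
from itertools import product

# Hypothetical analytic aspects, each with three defensible options.
# These labels are illustrative placeholders, not the choices used in the paper.
choices = {
    "model": ["cox", "logistic", "poisson"],
    "exposure": ["continuous", "tertiles", "quartiles"],
    "covariate_set": ["minimal", "moderate", "full"],
    "exclusions": ["none", "early_deaths", "prior_disease"],
    "energy_adjustment": ["none", "residual", "density"],
}

# Each specification takes one option from every aspect (a Cartesian product).
specifications = [dict(zip(choices, combo)) for combo in product(*choices.values())]

print(len(specifications))  # 3 ** 5 = 243
print(specifications[0])
```

Adding a sixth aspect with three options would triple the count to 729. That multiplication is how the real paper ends up in the quadrillions.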

Then they applied the specification curve analysis to one specific database, the NHANES (National Health and Nutrition Examination Survey) from 2007 to 2014. The goal was to study the association of unprocessed red meat with mortality.

Before I tell you the results, we should note that findings in nutritional epidemiology are especially sensitive to the choice of analytic method. There are few if any randomized trials in this space.

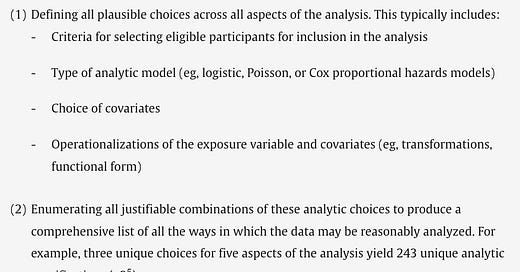

This screenshot explains the specification curve method:

Results

The systematic review (15 studies covering 24 cohorts) yielded 70 unique analytic methods. They differed in their choice of covariates, their statistical approach, and the way they grouped red meat exposure (continuous vs quantiles, for instance).

The second step applied specification curve analysis to the NHANES data, using all the analytic choices identified in the 15 primary studies from the systematic review.

Get this: based on the variation in possible analytic choices they calculated 10 quadrillion possible ways to approach the data.

That is obviously not feasible, so they randomly generated 10 unique combinations of covariates. Together with the other analytic choices, this yielded 1440 unique ways to analyze the association. Basically, it is a random sample of the quadrillions of possibilities.
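Here is a minimal sketch of that sampling step, under stated assumptions:

- **Covariate pool:** a hypothetical pool of 12 covariates (placeholder names, far smaller than the real pool).
- **Sampling rule:** each random covariate set includes each covariate with probability 1/2.
- **Other choices:** a hypothetical grid of 144 options, so that 10 × 144 = 1440.

None of these names or grid sizes come from the paper.

```python
import random
from itertools import product

# Hypothetical covariate pool (placeholder names, not the paper's list).
COVARIATES = ["age", "sex", "bmi", "smoking", "alcohol", "income",
              "education", "physical_activity", "diabetes", "hypertension",
              "energy_intake", "race_ethnicity"]


def sample_covariate_sets(pool, n, rng):
    """Draw n distinct random subsets of pool, including each covariate with
    probability 1/2, without ever enumerating all 2**len(pool) subsets."""
    chosen = []
    while len(chosen) < n:
        subset = frozenset(c for c in pool if rng.random() < 0.5)
        if subset not in chosen:  # keep only unique combinations
            chosen.append(subset)
    return chosen


rng = random.Random(42)  # fixed seed so the sample is reproducible
covariate_sets = sample_covariate_sets(COVARIATES, 10, rng)

# Hypothetical grid for the remaining choices: 4 * 4 * 3 * 3 = 144 options.
other_choices = list(product(
    ["cox", "poisson", "logistic", "log_binomial"],          # model
    ["continuous", "tertiles", "quartiles", "quintiles"],    # exposure coding
    ["none", "exclude_early_deaths", "exclude_prior_cvd"],   # exclusions
    ["none", "residual", "nutrient_density"],                # energy adjustment
))

# Cross every sampled covariate set with every other analytic choice.
specifications = list(product(covariate_sets, other_choices))
print(len(covariate_sets), len(other_choices), len(specifications))
```

Sampling subsets directly matters when the pool is large. Listing every subset first would be impossible at quadrillion scale.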

They excluded about 200 analyses with implausibly wide confidence intervals and ended up with roughly 1200 analyses. The figure plotting them is tough to read.

But here is the summary in words:

The median HR for the effect of red meat on all-cause mortality was 0.94 (IQR: 0.83–1.05). The interquartile range straddles 1.0, so the typical specification points to no association.

The range of hazard ratios was large. It went from 0.51 (a 49% lower hazard of death) to 1.75 (a 75% higher hazard of death). Of all specifications, 36% yielded hazard ratios greater than 1.0 and 64% less than 1.0.

As for statistical significance at p ≤ 0.05, only 4% (48 specifications) were significant. Of these, 40 indicated that red meat consumption reduced mortality and 8 indicated that it increased mortality.

Nearly half the specifications yielded unexciting point estimates, with hazard ratios between 0.90 and 1.10. When they restricted the analysis to women, the results were similar. They found no other subgroup of note.
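The summary statistics above are straightforward to compute once each specification has a hazard ratio, confidence interval, and p-value. Here is a minimal sketch using nine made-up results (not the study’s data). The exclusion rule is a hypothetical stand-in for “implausibly wide”: it drops any specification whose upper CI bound is more than 10 times its lower bound. The paper’s actual criterion may differ.

```python
import statistics

# Each tuple: (hazard ratio, lower 95% CI, upper 95% CI, p-value). Synthetic values.
results = [
    (0.51, 0.30, 0.87, 0.01),
    (0.80, 0.62, 1.03, 0.08),
    (0.88, 0.70, 1.11, 0.28),
    (0.94, 0.78, 1.13, 0.51),
    (0.97, 0.81, 1.16, 0.74),
    (1.02, 0.85, 1.22, 0.83),
    (1.10, 0.90, 1.34, 0.35),
    (1.75, 1.05, 2.92, 0.03),
    (1.30, 0.10, 16.9, 0.84),  # implausibly wide CI, should be excluded
]

MAX_CI_RATIO = 10.0  # hypothetical cutoff, not the paper's rule


def summarize(results, max_ci_ratio=MAX_CI_RATIO, alpha=0.05):
    """Summarize a specification curve after dropping very wide intervals."""
    kept = [r for r in results if r[2] / r[1] <= max_ci_ratio]
    hrs = sorted(hr for hr, _, _, _ in kept)
    q1, _, q3 = statistics.quantiles(hrs, n=4)  # quartile cut points
    significant = [hr for hr, _, _, p in kept if p <= alpha]
    return {
        "n_kept": len(kept),
        "median_hr": statistics.median(hrs),
        "iqr": (q1, q3),
        "range": (hrs[0], hrs[-1]),
        "share_above_1": sum(hr > 1 for hr in hrs) / len(hrs),
        "share_near_null": sum(0.90 <= hr <= 1.10 for hr in hrs) / len(hrs),
        "sig_protective": sum(hr < 1 for hr in significant),
        "sig_harmful": sum(hr > 1 for hr in significant),
    }


for key, value in summarize(results).items():
    print(key, value)
```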

Then they used inferential statistics to ask whether their findings, taken across all specifications, were inconsistent with the null hypothesis (no association). All these p-values were high. In other words, if there were truly no red meat-mortality relationship, a specification curve like theirs would not be surprising at all.
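Specification curve analysis, as originally proposed by Simonsohn, Simmons, and Nelson, does this kind of joint inference in two steps. First, it reruns every specification on many datasets in which the null is true by construction. Then it asks how often a summary statistic (for example, the number of significant specifications) is at least as extreme in those null datasets as in the real one. The exact procedure Zeraatkar and colleagues used may differ in its details. The sketch below, a minimal illustration only, shows just that final comparison, with hypothetical null counts.

```python
def joint_p_value(observed: float, null_stats: list[float]) -> float:
    """Share of null-world statistics at least as large as the observed one.

    The +1 in numerator and denominator counts the observed data as one draw,
    a common convention that keeps the p-value from being exactly zero.
    """
    at_least_as_extreme = sum(stat >= observed for stat in null_stats)
    return (at_least_as_extreme + 1) / (len(null_stats) + 1)


# Hypothetical inputs: the number of significant specifications in the real
# data, and the same count in nine synthetic datasets where the null was true
# by construction (a real analysis would use hundreds or thousands of these).
observed_significant = 48
null_significant = [31, 55, 62, 40, 71, 48, 39, 90, 58]

print(f"joint p = {joint_p_value(observed_significant, null_significant):.2f}")
```

A high joint p-value like this one means the real data look like a typical dataset drawn from a world with no association.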

Comments:

I find this amazing work. It extends the work of Nosek et al. because a) it studies a more biological question (red meat and death) rather than a social science question, and b) it uses far more than 29 different analytic methods.

The results also provide a sobering view of nutritional epidemiology. Of 1200 different analytic ways (specifications) to approach the NHANES data, only 48 yielded significant findings. The vast majority found no significant association.
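A rough yardstick for that 48 comes from a simple assumption: there is truly no association, and each of the roughly $n \approx 1200$ specifications is a valid test at the 5% level. Linearity of expectation then gives the expected number of “significant” results, however correlated the specifications are:

$$
\mathbb{E}[\text{number of significant specifications}] = \sum_{i=1}^{n} \Pr(p_i \le 0.05) = 0.05\,n \approx 0.05 \times 1200 = 60
$$

Correlation among specifications changes how widely the count scatters around 60, but not its expected value. So 48 significant results is no more than chance alone would be expected to produce.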

I would extend this paper’s lesson beyond nutritional epidemiology. I mean, every time we read an observational study, in any area of biomedicine, the authors tell us about their analytic method. It’s one method. Not 1200, or 10 quadrillion.

Now consider the issue of publication bias wherein positive papers get published and null papers not so much.

Take the example of this paper.

There were 40 specifications suggesting that red meat lowered mortality and 8 suggesting that it raised mortality. Red meat proponents could publish one of the 40; vegetarian proponents could publish one of the 8.
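The cherry-picking scenario is easy to make concrete. Here is a minimal sketch with invented specification results (hazard ratio, p-value), not the study’s data. Each camp keeps only the significant results pointing its way and reports the most striking one. The function and its `protective` flag are hypothetical names for illustration.

```python
# Synthetic (hazard ratio, p-value) pairs: one dataset analyzed many ways.
specs = [(0.62, 0.004), (0.71, 0.03), (0.88, 0.21), (0.95, 0.60),
         (1.01, 0.92), (1.06, 0.55), (1.38, 0.04), (1.52, 0.01)]


def headline(specs, protective: bool, alpha: float = 0.05):
    """Return the most extreme significant result in the preferred direction.

    protective=True looks for HR < 1 (red meat lowers mortality);
    protective=False looks for HR > 1 (red meat raises mortality).
    Returns None if no significant result points the preferred way.
    """
    wanted = [(hr, p) for hr, p in specs
              if p <= alpha and (hr < 1 if protective else hr > 1)]
    if not wanted:
        return None
    # Most extreme = lowest HR for the protective camp, highest for the other.
    return min(wanted) if protective else max(wanted)


print("Red meat lowers mortality:", headline(specs, protective=True))
print("Red meat raises mortality:", headline(specs, protective=False))
```

Same data, opposite headlines, and each one “significant.”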

One question I will ask Dr. Zeraatkar is why we can’t require authors of observational studies to at least report results from multiple analytic choices.

Until that happens, I would remain skeptical of non-randomized association studies.

Humility and the embrace of uncertainty are the best approach to observational science. This study strongly supports that contention.

JMM

I concluded a while ago that observational studies aren’t worth the electricity it takes to store them. They give authoritarians a rationale to rule over us: pressure us to spend money, force us to wear masks, take vaccines we don’t want, close down businesses. They do this even when RCTs are available that paint a very different picture.

One motivation is to create the illusion that they are protecting us from the dangers of the world, unlike their opponents. First decide the policy, then select from a near infinite number of studies that justify the policy. This isn’t a conspiracy, it’s just the lowbrow behavior that emerges from a less than ethical establishment.

The other great motivation is money. Observational studies bring lots and lots of money: useless tests, therapies, and pharmaceuticals. People waste hours of their lives watching meaningless videos, with lots of impressive jargon, claiming this or that based on observational studies.

The most disgusting aspect of this is that when a rare bird comes along who tries to warn the public: Vinay Prasad, Tracy Hoeg, Gilbert Welch, Robert Whitaker, Clare Craig, the attack machine tries to destroy them, and the attackers are usually MDs.

We live in a predatory world; you had better learn to protect yourself.

I enjoyed reading this post of yours but cringed and had rigors when I saw you use the latest "buzzword du jour" -- I refer to this SPACE thing. All of a sudden, and for mysterious reasons, plenty of folks no longer talk or write about (for example) some development in the field of cardiology; instead they feel compelled to use the precious verbiage, "cardiology space". Ditto for just about every other discipline or work activity that can be imagined for the human race.

Instead of saying that I took my Allen Edmonds loafers to a competent shoe repair shop last week, it is apparently now de rigueur to say instead, "I took my Allen Edmonds loafers last week to a guy who is very active these days in the shoe repair space". If you think I am being hyperbolic here, please think again! The unctuous, highly paid cretins who dominate healthcare administration in our country have recently added the noun SPACE to their list of automatic bullshit terminology. In other words, using the noun "space" is now a big fad in the Healthcare Administration SPACE. God help us. Please.