Almost the AI Article I Want

Can a chest x-ray and artificial intelligence predict your cardiovascular future?

I’ve been using Tuesday to post the “Improving Your Critical Appraisal Skills” articles recently. Even though I’ve got the case-control study ready to go, I’m taking a quick break because I spent way too much time thinking about this article last week.

Several years ago, I was having coffee with one of my former students/advisees at the Plein Air Café in Hyde Park. This person is one of those who has lapped me in our careers. I’ll leave this person anonymous for several reasons but mostly because I feel like I’d be claiming some undeserved credit even noting that I once taught him/her.

Anyway, this person was telling me about a novel effort to use AI to predict readmission for a certain medical problem. The software would examine patients’ entire medical records (clinical notes, labs, radiology, and admission histories) and learn to stratify people by their risk of readmission. Interventions could then be tested in the high-risk groups. This was long enough ago that I was awed by the potential.

What I found awesome here was not only the use of machine learning but the possibility of identifying predictors hiding in plain sight. Might some of these turn out to be real, causal relationships that we would have considered biologically implausible? Might further study of these lead to paradigm shifts? One of us used the example, “maybe cat ownership is a powerful predictor of heart-failure readmission.”

I truly believe in the promise of AI in medicine. The current reality, for me -- in my tiny little corner of medicine -- has been a little disappointing. I admit that I do not follow the Medical AI literature closely.1 Most of what I see so far either worries me (AI answering patients’ questions) or impresses me (ability to interpret images), but in a less than profound way.

Last week, during my weekly journal skimming, I saw this article in the Annals of Internal Medicine: Deep Learning to Estimate Cardiovascular Risk From Chest Radiographs: A Risk Prediction Study. The abstract made me think that a single chest X-ray could maybe replace a lot of our clinical acumen and our ASCVD risk calculator. This was exciting to me for several reasons.

1. It would be amazing to be able to risk stratify any patient based on a CXR obtained for any reason at any point over the last 10 years.

2. The ASCVD calculator is not that accurate. It tends to predict that people are at higher risk than they are, which leads to overuse of statins.2

3. I imagined that the tool was picking up more than just vascular calcifications and heart size. Could there be obvious things on a chest film (a procedure we have been doing since the 1890s) that contain a lot more information than we thought? Might the separation of the acromioclavicular joint be the most powerful cardiovascular risk factor yet discovered?

The article is interesting, just not as good as I’d hoped. Let’s dive in.

The work here started back during the PLCO (Prostate, Lung, Colorectal, and Ovarian) Cancer Screening Trial. In the portion of PLCO that examined lung cancer screening (part of the research effort that eventually gave us annual chest CTs for high-risk smokers), participants were randomized to annual CXR or usual care. The CXRs of 80% of the participants randomized to the CXR arm were used to develop a model predicting cardiovascular death (as determined on the basis of the National Death Index, communication with next of kin, and annual questionnaires).

On the one hand, this was an ideal population to train on: a large group of people who had had chest x-rays, were being followed, and were at high risk for CV disease. The problems are that all these people were smokers, and they were not being followed for major adverse cardiovascular events (MACE: cardiovascular death, nonfatal MI, nonfatal stroke, or hospitalization for unstable angina), which is what we use ASCVD to predict.

The Annals article reports an external validation of this tool. The model developed on the PLCO participants was used to analyze the CXRs of outpatients taken in 2010 or 2011 at 1 of 2 hospitals (The General or The Brigham). The primary outcome was incident MACE within 10 years of the CXR, in populations who either did or did not have the data needed to calculate an ASCVD score. The researchers analyzed their data in a lot of ways, most of which don’t interest me that much, but there are a few intriguing points.

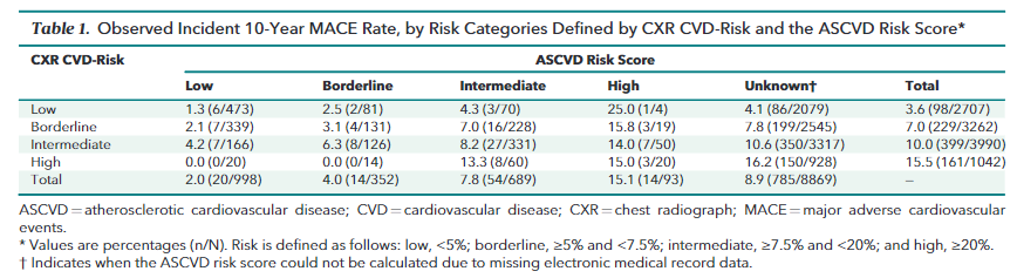

Table 1 shows how often the AI tool and the traditional ASCVD score agree and disagree and what people’s risk is in each group. Note the percentage translations for the risk strata in the caption.
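For readers who want to see what a table like Table 1 involves mechanically, here is a minimal Python sketch that cross-tabulates two risk scores and reports the observed event rate in each cell. The cut-points (5%, 7.5%, 20%) are an assumption borrowed from the familiar ACC/AHA ASCVD categories; the paper defines its own strata in the caption, and they may differ. The function names and the toy records are hypothetical, invented for illustration, and are neither the study’s data nor its code.

```python
from collections import defaultdict

# ASSUMPTION: 10-year risk cut-points modeled on the ACC/AHA ASCVD categories;
# the paper's own strata may be different.
CUTS = [(0.05, "low"), (0.075, "borderline"), (0.20, "intermediate")]


def stratum(risk: float) -> str:
    """Map a predicted 10-year risk (0-1) to a named stratum."""
    for upper, name in CUTS:
        if risk < upper:
            return name
    return "high"


def agreement_table(patients):
    """Cross-tabulate two risk scores and the observed event rate per cell.

    `patients` is an iterable of (ai_risk, ascvd_risk, had_event) tuples.
    Returns {(ai_stratum, ascvd_stratum): (n, event_rate)}.
    """
    counts = defaultdict(lambda: [0, 0])  # cell -> [number of people, events]
    for ai_risk, ascvd_risk, had_event in patients:
        cell = counts[(stratum(ai_risk), stratum(ascvd_risk))]
        cell[0] += 1
        cell[1] += int(had_event)
    return {cell: (n, events / n) for cell, (n, events) in counts.items()}


# Hypothetical toy records invented purely for illustration (not from the study).
toy = [
    (0.03, 0.04, False),
    (0.03, 0.12, False),
    (0.25, 0.12, True),
    (0.25, 0.22, True),
    (0.25, 0.22, False),
]

for cell, (n, rate) in sorted(agreement_table(toy).items()):
    print(cell, n, f"{rate:.0%}")
```

The cells worth staring at are the off-diagonal ones, where the two scores disagree; the observed event rate in those cells hints at which score is closer to the truth for those patients.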

The table most interesting to me (the one that earns this article a 5/10 on the internationally validated Adam Cifu scale of AI article interest) is supplement table 3. This table shows how good AI, reading a single CXR, is at risk stratifying people. The answer is... OK. Far from perfect, but close to what we currently use. Like the ASCVD calculator, AI tends to overestimate risk.
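“Tends to overestimate risk” is a statement about calibration. Below is a minimal sketch of one common way to check it, assuming you have each patient’s predicted 10-year risk and whether they had an event: sort patients by predicted risk, split them into equal-sized groups, and compare the average predicted risk with the observed event rate in each group. An overall expected-to-observed (E/O) ratio above 1 means the model predicts more events than actually occur. This is a generic illustration with invented toy numbers and hypothetical function names, not the analysis behind supplement table 3, and it ignores censoring (people lost to follow-up before 10 years), which a real survival analysis would have to handle.

```python
def calibration_by_bin(predicted, observed, n_bins=4):
    """Compare mean predicted risk with the observed event rate in groups of
    patients sorted by predicted risk.

    predicted: predicted probabilities (0-1); observed: 0/1 outcomes, same order.
    Returns a list of (mean_predicted, observed_rate, n) tuples, one per group.
    """
    if len(predicted) != len(observed):
        raise ValueError("predicted and observed must be the same length")
    if not 1 <= n_bins <= len(predicted):
        raise ValueError("n_bins must be between 1 and the number of patients")
    pairs = sorted(zip(predicted, observed))  # lowest predicted risk first
    size = len(pairs) // n_bins
    rows = []
    for i in range(n_bins):
        start = i * size
        # The last group absorbs any remainder when patients don't divide evenly.
        end = len(pairs) if i == n_bins - 1 else start + size
        group = pairs[start:end]
        mean_pred = sum(p for p, _ in group) / len(group)
        obs_rate = sum(o for _, o in group) / len(group)
        rows.append((mean_pred, obs_rate, len(group)))
    return rows


def expected_observed_ratio(predicted, observed):
    """Total predicted events divided by total observed events.
    A value above 1 means the model overestimates risk overall."""
    events = sum(observed)
    if events == 0:
        raise ValueError("no observed events; the ratio is undefined")
    return sum(predicted) / events


# Hypothetical toy data invented for illustration only (not from the study):
# predicted 10-year risks and whether each person had an event (1) or not (0).
toy_pred = [0.05, 0.05, 0.10, 0.10, 0.20, 0.20, 0.60, 0.60]
toy_obs = [0, 0, 0, 0, 0, 0, 0, 1]

for mean_pred, obs_rate, n in calibration_by_bin(toy_pred, toy_obs):
    print(f"predicted {mean_pred:.0%}  observed {obs_rate:.0%}  (n={n})")
print(f"E/O ratio: {expected_observed_ratio(toy_pred, toy_obs):.2f}")
```

In this toy data, every group’s mean predicted risk sits above its observed event rate and the E/O ratio is above 1, which is the pattern “overestimates risk” describes. A model can rank people well (good discrimination) and still be poorly calibrated in this way.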

This article is beginning to go where I want AI to go in the future. It tells us that AI can look at data, in this case a CXR3, and do nearly as well as we can with a clinical evaluation of a patient, an evaluation that uses information from the history and laboratory tests. I am looking forward to a time when AI can do better than we can do now. I am most looking forward to AI telling us how it is doing what it is doing so we can learn.

Of course, the worry is that AI won’t share the knowledge and will instead use it against us, but that is a post for the future.

Eric Topol, who covers and writes a lot about the field, is worth following if you are interested. Where I tend towards pessimistic cynicism, I think he tends towards breathless optimism. This article by him is a terrific place to begin.

I understand that there is incredible complexity hidden in that statement, and it could be the subject of a point-counterpoint post.

Is a CXR datum or data? I guess that is the point here. Probably datum to a human and data to a computer.

“I am looking forward to a time when AI can do better than we can do now. I am most looking forward to AI telling us how it is doing what it is doing so we can learn.” If AI could do what we once could but better and faster, why would we need to learn?

I’m not sure anyone is playing out the endgame here (except for those worried about some malevolent AI like you alluded to at the end of the essay). What are we hoping for? Like, what would be the best outcome of AI development? That machines do all the work we currently do?

It feels a bit like the promises made to families when household appliances were invented. “Think of how much time you’ll save with this vacuum cleaner!” Now I’m not downplaying the importance of the vacuum cleaner, but more technology has just made us more harried and anxious.

Byung-chul Han argues that this feeling has come upon us because time lacks rhythm and an orienting purpose. We whizz around from event to event, never contemplating, never lingering.

Do we really think AI will make healthcare more humane? What technical development in the last 200 years has given us an indication that’s the direction we’re going? I worry AI will degrade the quality of our work. What are we hoping for? And on what basis can we hope to retain what’s good in any of our experiences - either the practice of medicine or the experience of receiving healthcare?

I have been underwhelmed by AI's contributions in the cardiovascular field and was not impressed by this paper. My focus is on individual ASCVD risk, and that assessment comes most logically from looking directly for ASCVD (atherosclerotic plaque) in the coronary arteries.

The AI tool for this that most impresses me is called Cleerly; it takes data from coronary CT angiograms on the amount of soft and calcified plaque in an individual's coronary arteries. (https://www.jacc.org/doi/10.1016/j.jcmg.2023.05.020)

As to Eric Topol, who, as you say, tends towards breathless optimism: because he lacks a filter for weak AI (and observational COVID) studies, I don't recommend readers follow him.

(https://theskepticalcardiologist.com/2019/08/10/are-you-taking-a-statin-drug-inappropriately-like-eric-topol-because-of-the-mygenerank-app/)

AP