Artificial Intelligence in Clinical Medicine: Beyond Headlines

A framework for AI tools in patient care, and an appraisal of the latest “AI beats doctors” headline

None of us who think about the practice of medicine can avoid thinking about AI. I have gone from optimism to pessimism to a state of terror. In this essay, I appreciate how Dr. Shahriar articulates the applications of AI and his critical appraisal of a recent article. I also love the way he describes the task of clinical practice.

Adam Cifu

Artificial intelligence (AI) is a broad term that describes how computers and machines can be programmed to perform tasks that usually require human intelligence, like recognizing patterns, understanding language, or solving complex problems. From virtual assistants on our phones to recommendation algorithms on streaming platforms, AI is already part of our daily lives. In clinical medicine, AI has the potential to reshape patient care. Broadly, I like to group clinical AI tools into two buckets:

A. those performing focused, well-defined tasks, such as image interpretation, risk modeling, or documentation (e.g., scribing)

B. those supporting more complex and nuanced processes, like medical decision making

Examples of (A) comprise most of the research being published in the hot and novel AI-dedicated publications at top medical journals, such as NEJM AI or JAMA AI. This is because in domains like radiology or pathology, a ground truth often exists. For example, a diagnosis of celiac disease requires a biopsy of the small intestine showing certain findings under the microscope. These findings, which pathologists are trained to recognize, are the ground truth against which the AI can be trained, and the ground truth is shielded from the “messiness” of everything else that makes up clinical medicine.

Examples of (B) are far less common. To those of us regularly caring for patients, this is not surprising. Medical decision making (diagnosis and management) is the hardest part of our job. We have the privilege of meeting patients and their symptoms when they need us. This is where “messiness” takes center stage. Our job is to gather information, introduce our knowledge and tools, educate and foster trust, navigate uncertainty, make diagnoses, and together derive sensible plans that optimize the quality and/or duration of an individual patient’s life. The pace and intensity of this work are unique to each case. Still, irrespective of the setting, I cannot overstate the importance of the physician-patient relationship in this process.

Luckily for my generation, AI tools will be able to assist us with some of these complex tasks. Why are we lucky? Is AI not coming for our jobs? My answer has two parts. First, I care about my patients and know that the right tool, in the right setting, helps me provide better care. Second, I have cared for enough sick patients to know that there are parts of my job that technology will never replace.

Unfortunately, the only thing bigger than the true potential of AI in medicine seems to be the media hype. What better headline for the contemporary American consumer than “AI outperforms doctors”? I recently stumbled upon the latest example, from venture capitalist and Wix chairman Mark Tluszcz, on X. In his defense, he is an investor and wants to see the company (K Health) succeed. The study received widespread national media attention.

Study Overview

The study, published in the Annals of Internal Medicine, is titled Comparison of Initial Artificial Intelligence (AI) and Final Physician Recommendations in AI-Assisted Virtual Urgent Care Visits. Researchers evaluated how a novel AI decision-support tool, K Health, could assist physicians in virtual urgent care visits. This AI tool gathers information through a brief app-based triage chat (about 25 questions, 5 minutes) and the electronic medical record. When confident, it provides recommendations for diagnosis and management before the virtual visit. After the visit, the researchers compared AI recommendations with urgent care physicians’ medical decisions.

They selected 733 of 1,023 total visits (72%) with common complaints such as respiratory and urinary symptoms. From there, they excluded 94 (13%) with incomplete records, another 133 (21%) with low AI confidence, and then 45 (9%) where the managing clinician was not a physician, leaving 461 of 733 (63%) cases for the study.[1]
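
Because each exclusion percentage uses the number of cases remaining at that step as its denominator, the figures can be hard to follow at a glance. The following is a minimal Python sketch that rechecks the arithmetic using only the counts quoted above; the function name `exclusion_cascade` and the variable names are my own illustration and do not come from the study.

```python
# Counts as quoted in this essay; nothing here comes from the study's raw data.
total_visits = 1023
selected = 733

# (label, number excluded), applied in the order listed above.
exclusions = [
    ("incomplete records", 94),
    ("low AI confidence", 133),
    ("managing clinician not a physician", 45),
]


def exclusion_cascade(start, steps):
    """Apply exclusions in order, using a moving denominator.

    Returns a list of (label, excluded, denominator, percent) rows
    and the number of cases left after the last step.
    """
    rows = []
    remaining = start
    for label, excluded in steps:
        percent = round(100 * excluded / remaining)
        rows.append((label, excluded, remaining, percent))
        remaining -= excluded
    return rows, remaining


rows, final_n = exclusion_cascade(selected, exclusions)

print(f"selected: {selected}/{total_visits} ({round(100 * selected / total_visits)}%)")
for label, excluded, denominator, percent in rows:
    print(f"{label}: {excluded}/{denominator} ({percent}%)")
print(f"included: {final_n}/{selected} ({round(100 * final_n / selected)}%)")
```

Run as written, the denominators step down from 733 to 639 to 506, which is why the low-confidence exclusion reads as 21% rather than 18% of the 733 selected visits.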

The subsequent methods and results are complex. First, they looked for perfect alignment between the urgent care physician and AI and called this the “concordant” group. The concordant group comprised 262 of 461 (57%) cases. The remaining 199 (43%), called the “non-concordant” group, were misaligned in at least one way. All “non-concordant” and a subset of “concordant” cases were then scrutinized by physician experts (adjudicators), who classified both AI and urgent care physicians’ decisions as optimal, reasonable, inadequate, or potentially harmful. A third adjudicator was consulted for disagreements of more than one category (e.g., optimal vs inadequate; reasonable vs potentially harmful), which occurred in over half of the “non-concordant” cases (114/199) but rarely when there was concordance. These methods resulted in 684 paired scores (one score for AI and one score for MD) for 284 adjudicated cases.

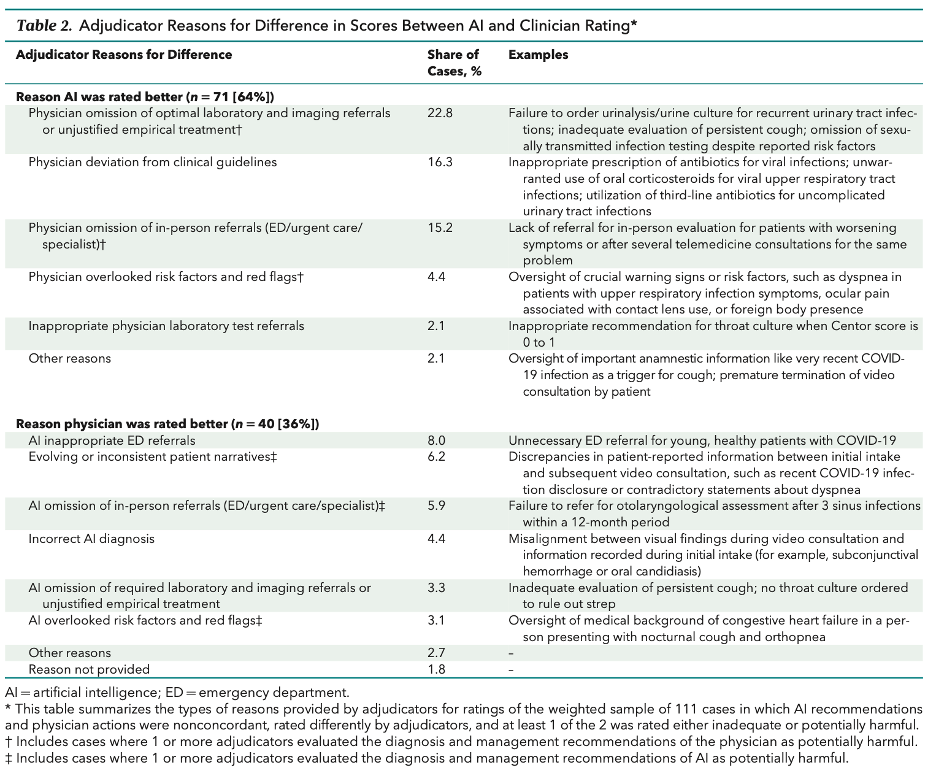

Their analysis centered on how AI and physician decisions diverged. Optimal or reasonable medical decisions were made by the AI in 86% of cases, compared to 79% for physicians. Among the 199 total “non-concordant” cases, 111 (56%) had at least one inadequate or potentially harmful recommendation (from either the physician or the AI). The inferior recommendation more often came from physicians (71/111; 64%) than from AI (40/111; 36%). The authors explore reasons for these observations in Table 2.
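
To keep the denominators straight, here is a small Python sketch that recomputes the proportions from the counts quoted in this essay. The helper `pct` and the variable names are my own, and the last line is my own derived figure rather than one reported by the authors.

```python
def pct(part, whole):
    """Percentage of `whole` represented by `part`, rounded to a whole number."""
    return round(100 * part / whole)


# Counts as quoted in this essay.
study_cases = 461
concordant = 262
non_concordant = study_cases - concordant

# Non-concordant cases with at least one inadequate or potentially
# harmful recommendation, and which side the inferior one came from.
flagged = 111
inferior_physician = 71
inferior_ai = 40

print(f"concordant: {concordant}/{study_cases} ({pct(concordant, study_cases)}%)")
print(f"non-concordant: {non_concordant}/{study_cases} ({pct(non_concordant, study_cases)}%)")
print(f"flagged: {flagged}/{non_concordant} ({pct(flagged, non_concordant)}%)")
print(f"inferior from physician: {inferior_physician}/{flagged} ({pct(inferior_physician, flagged)}%)")
print(f"inferior from AI: {inferior_ai}/{flagged} ({pct(inferior_ai, flagged)}%)")

# Derived here, not reported in the study: the flagged non-concordant
# cases as a share of all cases included in the study.
print(f"flagged as share of all study cases: {pct(flagged, study_cases)}%")
```

The point of the last line is scale: the 64% versus 36% split describes a subset of roughly a quarter of the included cases, not the full sample.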

The authors provide a nice discussion. Their main conclusion was not “AI beats doctors” but rather “our findings suggest that a well-designed AI decision support tool has the potential to improve clinical decision making for common acute complaints.” I absolutely agree. It would be fantastic to have an AI assistant who can reliably do some homework and provide meaningful details and suggestions prior to a relatively simple encounter, and this study is a nice first step.

However, in response to headlines, some critical limitations cannot be overlooked:

1. Narrow sample and setting

This study curated the simplest complaints (mainly respiratory/urinary) in the simplest setting (virtual urgent care). This design makes sense when considering the task at hand, but our conclusions (mainly in the media) cannot exceed the narrow scope.

2. The AI (at least this one) pleads the fifth, one-fifth of the time

Even after curating simple complaints and excluding incomplete records, the AI did not have sufficient confidence to provide recommendations in over 1 in 5 cases (21%). It’s great that the researchers reported this figure. In fact, I am very interested in the determinants of confidence (or lack thereof), but this was not discussed.

3. Adjudication process – obvious limitations

Experienced physicians (adjudicators) who determined right vs wrong were not blinded, meaning they knew whether a recommendation was coming from the human physician or the AI. This obviously may skew results toward AI, given that the company sponsoring the study (K Health) was involved in data extraction, analysis, and adjudication; eight employees are listed as authors. The authors appropriately discuss this limitation.

4. Adjudication process – less-obvious but critical limitations

There are often multiple ways to address a concern or symptom. In over half of the “non-concordant” cases – the focus of the analysis – there were large disagreements (at least 2 categories, such as optimal vs inadequate; reasonable vs potentially harmful) requiring a third adjudicator and committee review. Consensus was encouraged but not required, and after the review, all three adjudicators recorded their final ratings.

Despite the high prevalence of large disagreements for these relatively simple cases, and the availability of committee review data (as mentioned in the Methods and Supplement 1), neither the specifics of the disagreements nor the percentage reaching consensus were provided. Why were there such large disagreements for such simple cases? Who benefited on net (AI or human) from the committee review? These details are critical for two reasons: final ratings from cases that went to committee review accounted for over half of the primary data, and the adjudicators had the limitations discussed above (employees, authors, and unblinded).

Final thoughts

For simple complaints in a busy and low-acuity virtual urgent care setting, when inputs are sufficient, this AI system showed early promise. It was able to efficiently triage, scour the electronic record, and provide doctors with evidence-based suggestions. I look forward to seeing how my AI colleague continues to evolve, because despite what headlines may suggest, we are not rivals – we’re collaborators.

Arman Shahriar, M.D., is a graduating resident physician in internal medicine at the University of Chicago. He enjoys running and playing soccer – activities his future AI colleagues tragically will never be able to join him in.

[1] Percentages reflect a moving denominator, based on the sample construction diagram provided in the study.

AI has a couple of advantages over human physicians. AI doesn't care what its patient satisfaction scores are and will not suffer if it is sued for malpractice.

If I could exclude 21% of cases in which I have ‘low confidence’ in the assessment, my clinical decision making would look stellar. These are the cases that differentiate good from average clinicians. In this study, AI gets a pass on these cases. So it’s hard to say based on this study how AI decision making does when it matters most.